Today’s New York Times article on rapid online repricing by holiday retailers depicts a retail world starting to approach the “flash-trading” status of financial markets:

Amazon dropped its price on the game, Dance Central 3, to $24.99 on Thanksgiving Day, matching Best Buy’s “doorbuster” special, and went to $15 once Walmart stores offered the game at that lower price. Amazon then brought the price up, down, down again, up and up again: in all, seven price changes in seven days.

And:

The parrying could be seen with a Nintendo game, Mario Kart DS.

A week before Thanksgiving, the retailers’ prices varied, with Amazon selling it at $29.17, Walmart at $40.88, and Target at $33.99, according to Dynamite Data. Through Thanksgiving, as Target kept the price stable, Walmart changed prices six times, and Amazon five. On Thanksgiving itself, Walmart marked down the price to its advertised $29.96, which Amazon matched.

This made me think about a Greg Hannsgen post from last May on the Levy Economics Institute’s Multiplier Effect blog, a post I’ve been meaning to write about. It looks at the pricing mechanism in terms of how fast prices adjust.

The especially interesting thing about this post: It uses a dynamic simulation model to display the effects of slower and faster price adjustments, and lets you run the model yourself, right on the page: you move a slider to change the speed of price adjustment and watch the results. (You need to install a browser plug-in from Wolfram, which only worked for me in Firefox under Mac OS X 10.6.8; it failed in Chrome.)

The gist:

You wonder what will happen when markets finally start working. How about, for example, a market that changes prices and wages quickly in response to fluctuations in demand? …

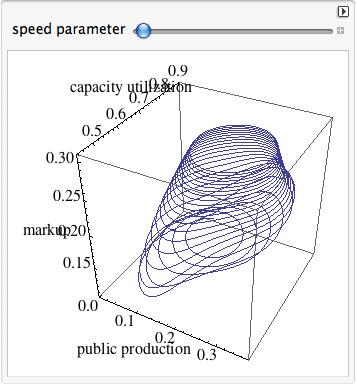

The pathway shown in the figure just below is followed by public production, capacity utilization, and the markup.

As you move the lever to the right, you are increasing a parameter that controls the speed at which the markup changes in response to high or low levels of customer demand.

What happens as the speed parameter is increased is that the economy’s pathway gradually changes until there is a relatively sudden vertical jump in the middle and much higher markup levels at the end–which means a bigger total rise in capital’s share.

Then:

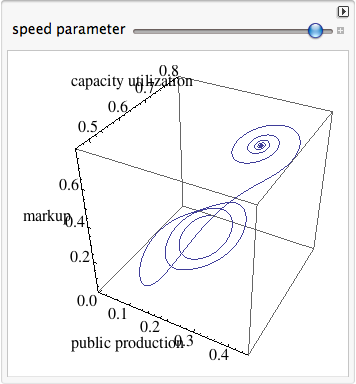

The next pathway is the one followed by a second group of three variables during the same simulation. This second group includes: money, the government deficit (surpluses are negative numbers in this figure), and the employment rate (total work hours divided by total hours supplied).

When the lever is all the way to the right, the pathway begins with an outward spiral, leading to a new inward spiral, and finally an employment “crash” of sorts that occurs as the center of the second spiral is reached. This occurs after the markup has reached very high levels, as seen in the earlier diagram.

That’s a pretty amazing set of conclusions that I don’t think could ever have emerged from mainstream economic modeling techniques. You’ll notice that equilibrium, in particular, is a decidedly problematic concept here. It only seems to emerge when things have gone off the rails and hit the edge of the known world — not in those comfortable middle grounds so fondly envisioned by mainstream models.

I am making no claims of validity for this model’s assumptions, techniques, or conclusions (though I find the conclusions fascinating and plausible) — that’s beyond me. What I want to talk about is the validity of this class or type of dynamic simulation model compared to those commonly employed in “textbook” economic analysis.

In particular it makes me think about some recent posts and comments by Nick Rowe that are (bless him) teaching posts at least partially in response to my constantly demonstrated inability to properly grasp those standard approaches. (No: I’m not being ironic.)

Nick laid out the textbook understanding of the pricing mechanism based on marginal cost of production with wonderful clarity and broad insight here. I want to suggest that the explanation’s key feature is its use of “short-term” and “long-term.” It’s all about time.

But looking at the figures above — which model time fluidly and continuously (realistically?) as opposed to depicting it in two vaguely defined “chunks” — I want to ask whether Nick’s explanation, and the models employed in that explanation, are sufficient (or even proper) to grasp the processes playing out in the marketplace. Could they predict the effects that we see above? Is that type of depiction and prediction even within their inherent realm of capability?

Yes, Hannsgen’s model employs some textbook constructs, but it deploys them in a model that is structurally, qualitatively different from “comparative static” textbook models. Maybe the modeling technique is the message. Or at least, the proper modeling technique is a necessary condition for imparting an accurate and useful message.
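To make that contrast concrete, here is a toy sketch in Python. It is emphatically not Hannsgen's model: it is a single market with linear demand and supply, and the adjustment rule (each period the price moves by `speed` times excess demand), the parameter values, and the function names are all assumptions made purely for illustration. The comparative-static function returns only the resting point; the simulation returns the whole path, and the path's character depends on the speed parameter even though the resting point does not.

```python
def equilibrium_price(a, b, c, d):
    """Comparative-static answer: the price where demand a - b*p equals supply c + d*p."""
    return (a - c) / (b + d)


def simulate_price_path(p0, speed, a, b, c, d, steps=60):
    """Dynamic answer: each period the price moves by speed * excess demand.

    This adjustment rule is an illustrative assumption, not taken from any cited model.
    """
    path = [p0]
    for _ in range(steps):
        p = path[-1]
        excess_demand = (a - b * p) - (c + d * p)
        path.append(p + speed * excess_demand)
    return path


def classify(path, p_star, tol=1e-6):
    """Label a path by where it ends up and whether it overshoots along the way."""
    deviations = [p - p_star for p in path]
    # A sign change between consecutive deviations means the price jumped past p_star.
    overshoots = any(x * y < 0 for x, y in zip(deviations, deviations[1:]))
    if abs(deviations[-1]) > abs(deviations[0]):
        return "diverges"
    if abs(deviations[-1]) < tol:
        return "converges with overshooting" if overshoots else "converges smoothly"
    return "has not settled"


if __name__ == "__main__":
    a, b, c, d = 100.0, 2.0, 10.0, 1.0  # hypothetical demand and supply parameters
    p_star = equilibrium_price(a, b, c, d)
    print("comparative-static price:", p_star)
    for speed in (0.1, 0.5, 0.7):
        path = simulate_price_path(20.0, speed, a, b, c, d)
        print("speed", speed, "->", classify(path, p_star))
```

With these made-up numbers the comparative-static price is the same for every speed, while the three simulated paths approach it smoothly, approach it with overshooting, and run away from it, respectively. Even in a model this crude, "faster price adjustment" is not automatically "closer to equilibrium."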

Reading Nick’s posts and those of other smart econobloggers and -commenters, I am constantly astounded at his (their) apparent ability to intuitively comprehend and mentally manipulate multidimensional (and multiconceptual) interplays that leave me flummoxed. But I still wonder: is that ability sufficient for Nick to representatively model in his head (to intuitively understand) the complex interplay of factors at work? His frequent comments about holding one factor constant, and the importance and difficulty of simultaneity in our thinking, suggest that the answer might be no.

When you add a minimum wage to a free-economy model, is he able to simulate, in his head, all the possibly resultant pathways through the multidimensional space of prices, labor inputs, capital inputs, and output quantities (not to mention redistribution feedback effects, or utility-related factors), all over time — a space where no factors are held constant?

Yes, I’m questioning Nick’s quantitative ability given the models employed to do this kind of simulation in his head. (Suggesting: it’s not just me!, though there is certainly a matter of degree.) But as a result, and also, I’m questioning the qualitative ability of those models as employed to enable such understanding in our limited minds (notably, mine).

As I said recently, science is about really understanding how things work — telling a convincing causative story — not (just) predicting what will (might) happen. (At the extreme, you could say that prediction is only useful for scientists as a test of understanding.) The textbook models seem to provide some predictive power, but you gotta wonder how much of that is false positives. And given that question, you have to ask how well they really “explain” how economies work — how much true understanding they provide.

In other words — back to my apparently congenital inability to really internalize and understand the textbook models — it’s not my fault! (Yes: now I am being ironic.)

All this raises one big question for me: why aren’t mainstream economists all over these kinds of dynamic simulation models? Why do we only see them at the fringes, in work by “heterodox” outsiders like Hannsgen and Keen, and in the ever-about-to-emerge work promised by the guys at the Santa Fe Institute? Why aren’t big, and (within limits) out-of-the-box thinkers like Nick, Scott Sumner, Tyler Cowen, etc. — people who show every indication of really wanting to understand — fascinated by the possibilities of this type of modeling? In the weather and climate business, textbook economic modeling techniques would be laughed out of the room. Are not economies of a similar complex, dynamic, emergent type with weather and climate systems — arguably even more so, and more complex, because economies include the game-theory grist of conscious intentions and expectations (about other people’s intentions and expectations)?

I recently corresponded with an econ PhD candidate who really wanted to work with and build such models for his thesis. He reported that everything about the institutional and intellectual structure of academic economics militated against his doing so. “Just grab a data set, build a model and pull some regressions, call it good and head for the tenure track.”

You don’t have to read Kuhn or Marx to wonder whether this isn’t the result of 1. the irresistible intellectual gravitational pull of institutionally sanctioned models however obviously flawed (miasma, phlogiston, epicycles, equilibrium), and 2. the undeniable (inherent?) effectiveness of existing textbook economic models and modeling techniques in perpetuating and amplifying the established power structures and (increasingly) unequal distribution of income and wealth — wealth that actively seeks to perpetuate and expand itself via institutional structures like universities. (No names, just initials: Mercatus Center.)

I’m not imputing moral corruption here (except perhaps institutional). I both prefer and tend to believe that most of us try to do right, “as God gives us to see the right.” Rather, I tend toward the quite plausible institutional explanation laid out so nicely by Chomsky in Manufacturing Consent. In my words: institutions that are dependent on, are part and parcel of, those larger structures of power and wealth, only hire and promote people who already — perhaps by their very nature — see “right” right. (Or right “right.”)

And who knows? They might be right. Maybe my personal incentive is just to show (myself?) how smart I am relative to the mainstream institutions. But based on the Aha! moments of intuitive understanding that I experience when I see and explore models like Hannsgen’s, I tend to doubt whether that’s the only thing at play.

Cross-posted at Angry Bear.

Comments

4 responses to “Modeling the Price Mechanism: Simulation and The Problem of Time”

Two points: First, savvy consumers who visit price comparison sites know that prices vary widely across sellers at any time and they can also see from the graphs that price fluctuates over time, with the bottom often at this time of the year. Retailers are capturing volume of sales and market share in periods of heavy buying. These periods do not reflect the “going price.”

As someone familiar with retail and goods trading I can also report that there is something called “the real price.” It is the price that vendors will take and be satisfied with. The “going price” is just an anchor to make the real price seem attractive when it comes along in sales, especially at high volume times like this. And, of course, in wholesale markets it is all about finding the real prices. It’s safe to say, I think, that most buyers don’t arrive at the real price. Only very skilled and tenacious buyers are able to smoke out crafty sellers.

One reason that higher prices are possible is transaction cost. Unless one is a volume buyer of the same or similar items, it is just not worthwhile doing the research, paying an extra shipping cost, etc. So I am skeptical of talking of “the market price” in many goods markets, even food. There is a whole myth of “the market.” This is even true in places where there are still bazaars, where one would expect open competition to get to the real price quickly. It just does not happen in practice. Anyone who buys seriously in such markets knows this.

These are reasons that goods markets remain fundamentally different from financial markets operated on centralized exchanges with electronic reporting of changes in marginal prices available globally instantaneously. BTW, it was not this way when I was trading stocks for myself small time as a grad student back when. I quit because the transaction cost was too high. The digital age has changed everything wrt financial markets, greatly increasing transparency and transaction cost.

And, of course, in oligopoly markets there is rampant price setting rather than price discovery. Many goods markets are oligopoly markets and it just looks like sellers are competing hard with each other when it is not the case. They are using similar strategies, and no one is revealing the real price.

@Tom Hickey “The digital age has changed everything wrt financial markets, greatly increasing transparency and transaction cost.”

Tom, I’m very interested to hear more. Obviously the explicit trading costs — the commissions — have declined. But if transaction costs include the research etc., those explicit costs are a trivial part. Can you explain more why you say this?

@Asymptosis

My bad. I meant to write “increasing transparency and decreasing transaction cost.” For the small trader, the research cost used to be significant in terms of time and money, for instance. There were no computers then and one had to purchase research, mostly market letters, and make one’s own charts by hand, for example. Of course, if one wanted to “do the math” that was definitely a big cost of time in the absence of computing power. Commissions were also a significant transaction cost for small traders who had no negotiating power.

Moreover, transparency was pretty low, too. The analysts catered to the large accounts. One was stuck talking to a broker who was not making a lot of money talking to you. Guess how well that went. Some people were even stupid enough to listen to their recommendations and trade on them.

Things have really changed since that era, first, with the advent of computers, and then with the Internet. There are even pretty small traders that have access to Bloombergs now. Even specialists didn’t have this amount of info in real time back then, although, of course, they knew their own market better than anyone else.

So while financial markets are not perfect by any means, they are a lot more accessible to almost anyone than they used to be. I don’t know that this has affected the percentage of rubes tho. But the point is that the financial markets are much different from the goods markets in that the objective is transparency and it is being increased, while transaction cost is decreasing due to technological innovation.

Yet, even here, the power has increased vastly but the cost may even have increased somewhat. Many small traders pack a lot of computing power and buy pretty costly applications. These apps are customizable, so they spend a lot of time doing that, too. So while it is true that to be at the level that small traders were back then is a lot less costly, traders probably spend as much if not more today for vastly superior power.

Conversely, goods markets are designed not to be transparent, and some markets are heavily dependent on lack of transparency. If many Americans realized what a lot of the mark-ups actually are, they would be floored. This cannot exist in a truly competitive market, and while a lot of these markets are highly competitive they are not truly competitive, because no one wants to kill the goose that lays the golden egg. I am not suggesting that there is conspiratorial collusion, but there is tacit collusion around a price that can be extracted, and it depends on keeping buyers in the dark about what is going on behind the curtain. It also involves a lot of cognitive and behavioral psychology in creating the market.

Steve,

“All this raises one big question for me: why aren’t mainstream economists all over these kinds of dynamic simulation models? Why do we only see them at the fringes, in work by “heterodox” outsiders like Hannsgen and Keen, and in the ever-about-to-emerge work promised by the guys at the Santa Fe Institute?”

Isn’t that the exact opposite of what we see?

Mainstream (macro)economics is *all* about dynamic simulation models, and there is very little of it at the fringes–with a few honourable exceptions like Gintis or Rosser.

“In the weather and climate business, textbook economic modeling techniques would be laughed out of the room.”

Are you comparing like with like here? What textbook are you reading?

Mankiw, e.g., is an intro textbook for attention-deficit teenagers on hugely over-subscribed courses. It’s not supposed to be cutting edge stuff. How does it compare to weather and climate textbooks aimed at a similar demographic? Conversely, how do more cutting edge economic models compare to cutting edge weather and climate models?